This X-ray/CT scan shows the placement of electrodes over the temporal lobe that scientists used to decode what the patient was hearing.

The day we can scan a person’s brain and “hear” their inner dialogue just got closer. Scientists at the University of California, Berkeley recorded brain activity in patients as they listened to a series of words. They then used that activity to reconstruct the words with a computer. The research could one day help people who are unable to speak because of brain damage.

It’s not every day neuroscientists get a chance to record activity directly from the human brain. A group of 15 patients with either epileptic seizures or brain tumors was already scheduled to undergo neurological procedures. The patients, all English speakers, volunteered for the study. After neurosurgeons cut a hole in each patient’s skull, the research team placed 256 electrodes over the temporal lobe, the part of the brain that processes auditory signals. The scientists then played words, one at a time, while recording activity in the temporal lobe.

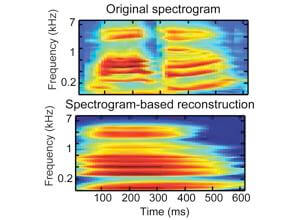

The auditory features of a sound can be characterized by a spectrogram, which shows how strongly each frequency is present in the sound as it unfolds over time. The scientists hoped to use the pattern of brain activity recorded while a word such as “partner” was played to generate a second spectrogram. If it worked perfectly, this process, called “stimulus reconstruction,” would produce a spectrogram identical to the original. However, because the temporal lobe is only one of several brain regions that process sound, the scientists did not expect an exact match.
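To make the idea of a spectrogram concrete, here is a minimal Python sketch, assuming NumPy is available. The sound is cut into short overlapping frames, each frame is tapered with a Hann window, and a Fourier transform gives the strength of each frequency in that frame. The function name and the window and hop sizes are illustrative choices, not details taken from the study.

```python
import numpy as np

def spectrogram(signal, sample_rate, window_size=256, hop=128):
    """Return (freqs, times, magnitude) for a mono signal.

    magnitude[f, t] is the strength of frequency bin f in the frame
    that starts at times[t] seconds. Window and hop sizes are illustrative.
    """
    window = np.hanning(window_size)
    n_frames = 1 + (len(signal) - window_size) // hop
    # Slice the signal into overlapping, Hann-tapered frames.
    frames = np.stack([
        signal[i * hop : i * hop + window_size] * window
        for i in range(n_frames)
    ])
    # Magnitude of the real FFT of each frame; transpose to (freq bins, frames).
    magnitude = np.abs(np.fft.rfft(frames, axis=1)).T
    freqs = np.fft.rfftfreq(window_size, d=1.0 / sample_rate)
    times = np.arange(n_frames) * hop / sample_rate
    return freqs, times, magnitude

# Usage: a one-second 1,000 Hz tone sampled at 16 kHz.
sr = 16000
t = np.arange(sr) / sr
tone = np.sin(2 * np.pi * 1000 * t)
freqs, times, mag = spectrogram(tone, sr)
print(mag.shape)                      # (frequency bins, time frames)
print(freqs[mag.argmax(axis=0)][:3])  # strongest frequency in the first frames
```

For a pure tone, the strongest bin in every frame sits at 1,000 Hz. A spoken word such as “partner” would instead show energy shifting across many frequencies over time, and that changing pattern is what a reconstruction has to recover.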

Brian Pasley, a postdoctoral fellow and the study’s lead author, devised two different computer models for stimulus reconstruction. Each was built on different assumptions about how the brain processes sound. One model outperformed the other, enabling the computer to reconstruct the original word 80 to 90 percent of the time.
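The article does not describe the two models in detail, so the following is only a hedged illustration of what stimulus reconstruction can look like, not the team’s method. It assumes a simple linear decoder fit with ridge regression, which maps electrode activity to spectrogram values, and a simple identification test that picks whichever candidate word’s spectrogram best correlates with the reconstruction. All data are synthetic: the “encoding” from sound to electrodes is made up, and the function names (`fit_linear_decoder`, `reconstruct`, `identify`, `simulate_brain`) are hypothetical. Real decoders typically also use time-lagged neural features, which this sketch omits.

```python
import numpy as np

def fit_linear_decoder(neural, spec, alpha=1.0):
    """Fit ridge-regression weights W so that neural @ W approximates spec.

    neural: (time points, electrodes) array of recorded activity.
    spec:   (time points, frequency bins) array of the heard sound's spectrogram.
    """
    n_electrodes = neural.shape[1]
    gram = neural.T @ neural + alpha * np.eye(n_electrodes)
    return np.linalg.solve(gram, neural.T @ spec)

def reconstruct(neural, weights):
    """Map new brain activity to an estimated spectrogram."""
    return neural @ weights

def identify(reconstruction, candidates):
    """Return the index of the candidate spectrogram most correlated with the reconstruction."""
    scores = [np.corrcoef(reconstruction.ravel(), c.ravel())[0, 1] for c in candidates]
    return int(np.argmax(scores))

# Synthetic demo (hypothetical): a made-up linear encoding from a 16-bin
# spectrogram to 64 electrodes, plus a little measurement noise.
rng = np.random.default_rng(0)
n_bins, n_electrodes = 16, 64
encoding = rng.normal(size=(n_bins, n_electrodes))

def simulate_brain(spec):
    """Hypothetical brain response: linear mix of spectrogram bins plus noise."""
    return spec @ encoding + 0.1 * rng.normal(size=(spec.shape[0], n_electrodes))

# Train the decoder on "listening" data, then test on unseen words.
train_spec = rng.random((2000, n_bins))
weights = fit_linear_decoder(simulate_brain(train_spec), train_spec)

words = [rng.random((50, n_bins)) for _ in range(5)]  # five hypothetical word spectrograms
guess = identify(reconstruct(simulate_brain(words[3]), weights), words)
print(guess)  # index of the word the decoder thinks was heard
```

In this toy setup the decoder should single out the word that was actually played, because the synthetic encoding is linear and nearly noise-free. Real brain recordings are far noisier and only partly linear, which is one reason researchers compare models built on different assumptions.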

The study was published recently in PLOS Biology.

That’s music to the ears of people who can’t speak because of brain damage. Strokes or neurodegenerative diseases such as Lou Gehrig’s disease can damage the brain’s language centers and impair speech. Whether this research can help such patients hinges on the idea that hearing words and thinking words activate similar brain processes. There is evidence that this is indeed the case, but more research is needed to work out exactly how perceived speech and inner speech are related. Even so, the current study lends hope to a potential treatment. “If you can understand the relationship well enough between the brain recordings and sound, you could either synthesize the actual sound a person is thinking, or just write out the words with a type of interface device,” Pasley told the Berkeley News Center.

Just last year researchers used brain implants to improve memory in rats. Others used an implant to help paralyzed rats walk again. The current study paves the way for yet another domain in which neural interfaces could one day be used to improve our lives.

[image credits: Adeen Flinker via UC Berkeley and modified from PLOS Biology]
